Welcome to the THANNA Project

Neural networks are revolutionizing image recognition, image generation, and language interpretation. But as their complexity increases, so does the computing power needed for fast inference.

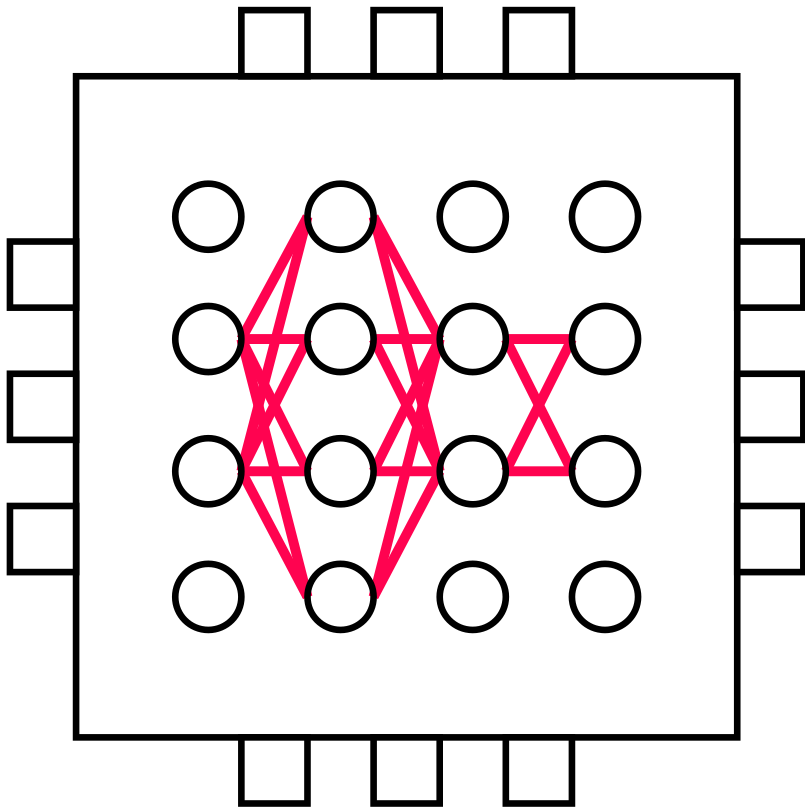

Programmable logic in the form of FPGAs is particularly well suited as a platform for this application: its programmability allows the hardware to be closely adapted to the algorithms, so neural networks can be accelerated very efficiently.

The THANNA project (TH-Augsburg-Neural-Network-Accelerator) aims to develop a new, open framework that accelerates the inference of neural networks using programmable logic (FPGAs).

THANNA consists of ...

- ... software that is used to optimize neural networks (language: Python)

- ... an embedded Linux driver that communicates with the hardware accelerator (languages: C, Python)

- ... efficient and flexible accelerator hardware implemented in programmable logic (languages: TCL, VHDL).

The project started in 2023 and currently involves several enthusiastic students who are contributing through project work or theses.

Additional Information

Attention, Students!

Would you like to be part of this exciting project? Or are you interested in contributing in some other way? We invite you to join us and help shape the future of the THANNA project, for example as part of your thesis, project work, or an elective course.